UNSW Nuclear Research

Real-time technology reimagines visualisation for nuclear hot cells.

- University of New South Wales, Australia

Inspired by developments in 3D imaging within game development, we worked with UNSW to build a prototype showing how real-time volumetric capture could revolutionise viewing in nuclear containment spaces. A low-cost, reliable alternative to traditional hot cell viewing methods, the prototype used commercially available depth capture technology to reconstruct 3D scenes in real time on a computer or in virtual reality, increasing both safety and usability in containment environments.

Accessing the inaccessible

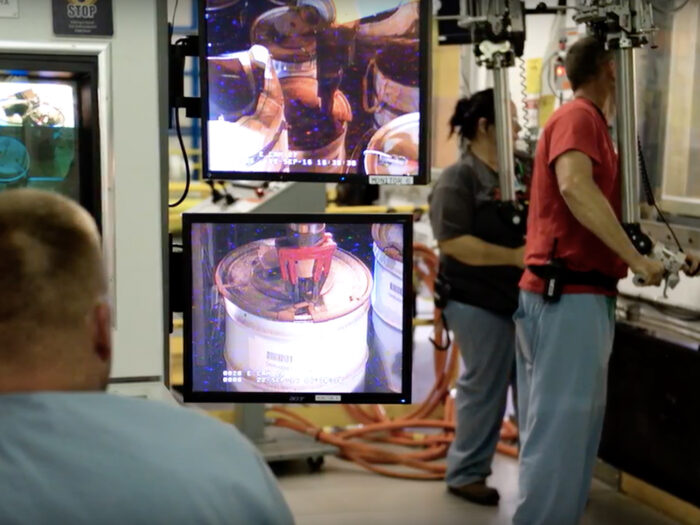

The Problem

Working in nuclear industries involves dealing with dangerous materials which, unsurprisingly, can’t be handled in a normal lab. Instead, nuclear researchers must work remotely, using robot arms or ‘telemanipulators’ to interact with lab environments they see through thick lead-glass windows, 2D video streams or 3D CAD models.

Each of these methods has its own advantages and disadvantages. Lead-glass windows let engineers see what they’re doing in real time, but with limited visibility and only one point of view. 2D video screens provide multiple viewpoints, but are often unreliable and disorienting. 3D CAD models allow engineers to navigate three-dimensional space, but don’t account for real-time changes in the actual lab.

Remote access in real-time

Our Solution

When we began speaking with researchers at the UNSW Schools of Electrical Engineering and Nuclear Research, it became clear that there was room for innovation. What was needed was an approach that combined the benefits of conventional methods while eliminating their constraints. Given recent and ongoing advances in game development and 3D scene reconstruction in other industries, we believed such a system was possible. So we decided to build a prototype.

Our imaging system would use commercial depth cameras and a game engine development platform to create interactive 3D scene reconstructions in real time. Because the resulting virtual environments could be rendered on either a computer monitor or a virtual reality headset, this solution would provide a low-cost, reliable and more intuitive way to interact with remote environments.

Capturing a 3D scene

Depth Capture

The first step of our prototype imaging system involved capturing the physical scene to be reconstructed. To do so, we took advantage of the latest in depth capture and real-time rendering technology. This ensured that the data visualisation platform would be able to achieve higher levels of fidelity, redundancy and interactivity than traditional window and 2D camera imaging solutions.

Using low-cost, fixed-location Microsoft Kinect depth cameras also drastically increased the operational safety of the system, a key consideration given the dangerous nature of nuclear materials. The built-in redundancy of this technology, and the way it eliminates the need for manual camera control after initial setup, made it well suited to the specific requirements of nuclear containment environments.

Real world fidelity

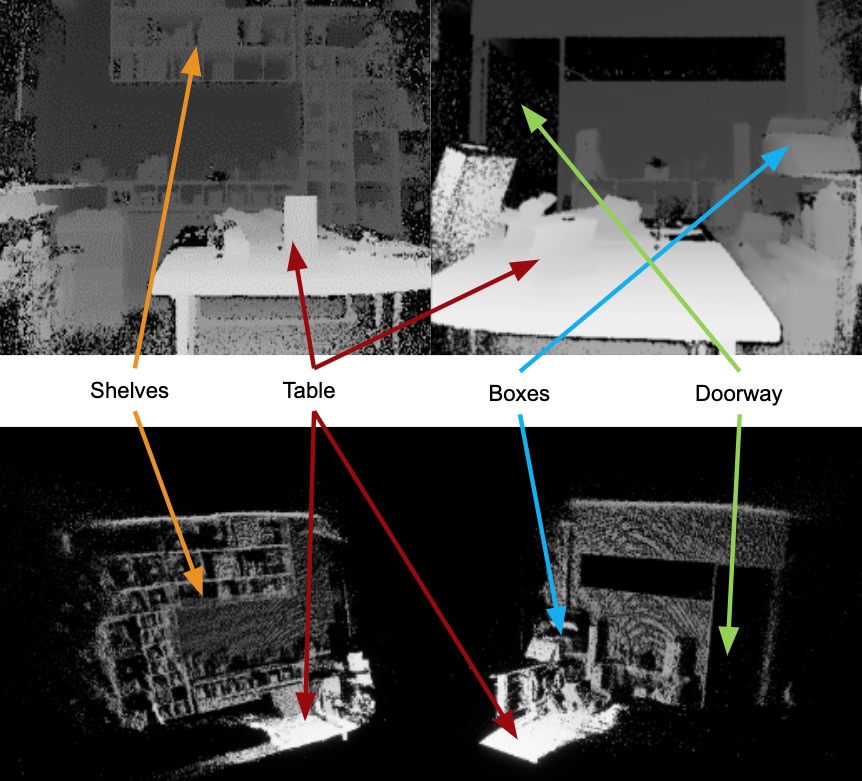

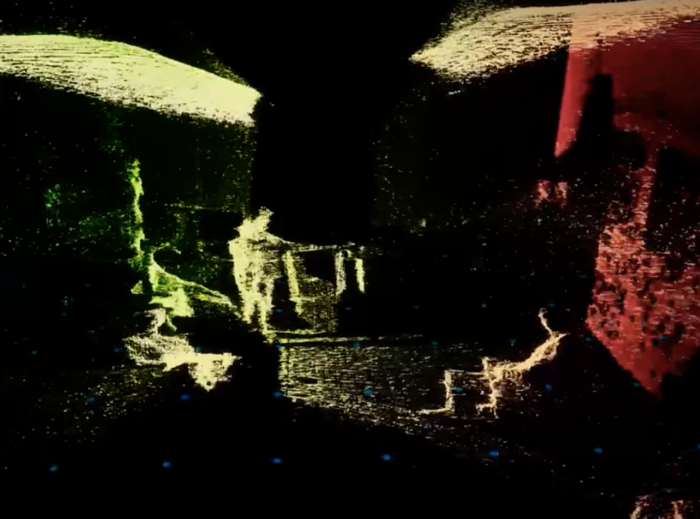

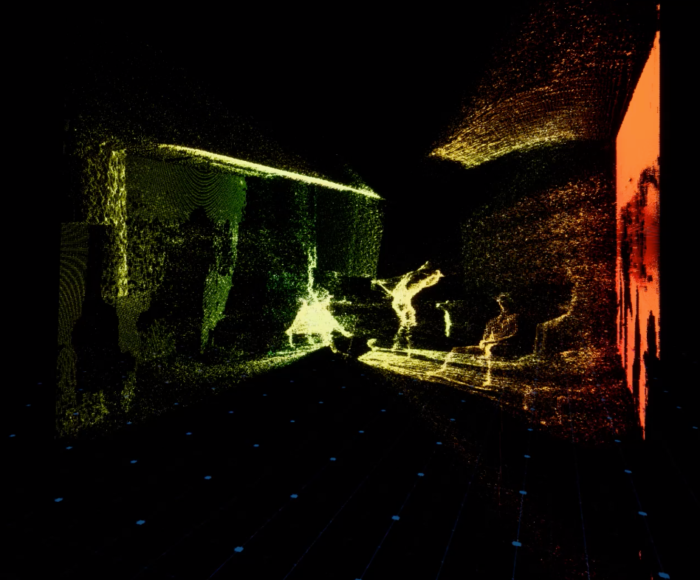

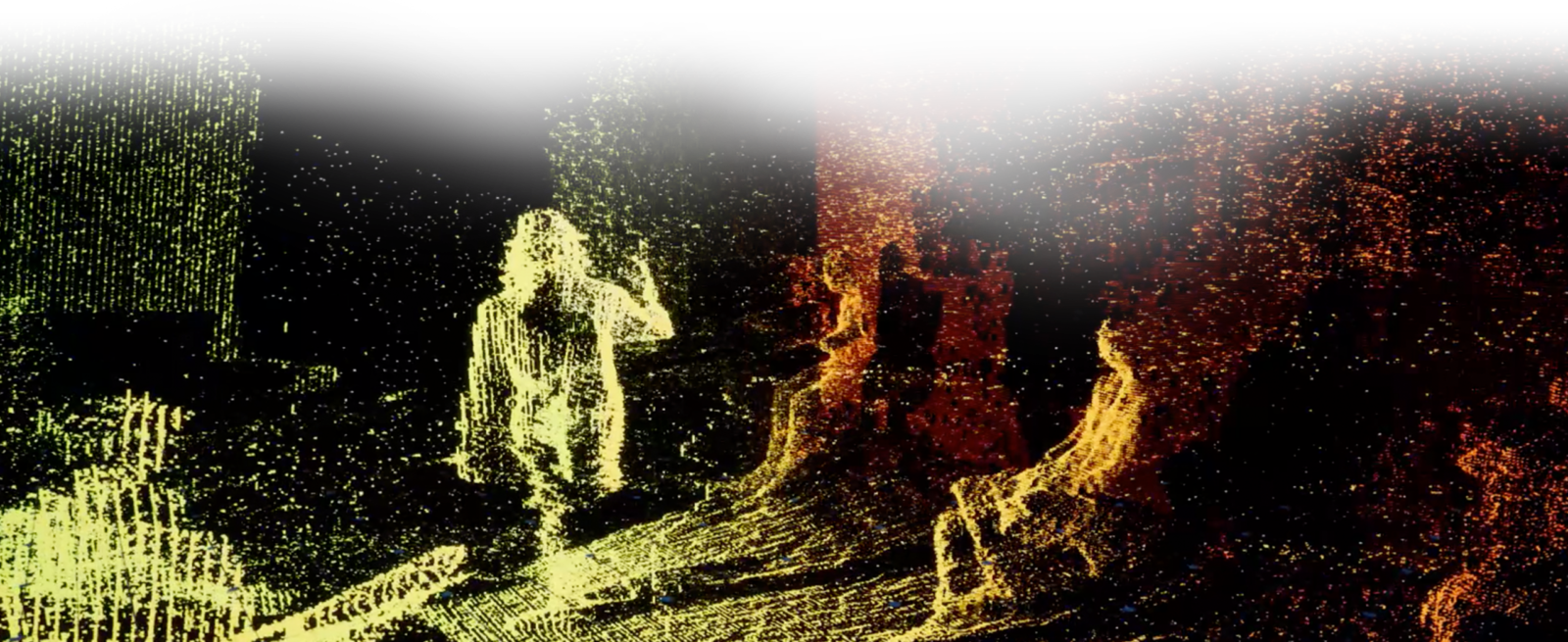

Point Clouds

Once the depth images were captured, we used Unreal Engine to construct separate point clouds for each available image. These point clouds were then calibrated together to form a complete 3D scene reconstruction. While the point cloud data visualisations may not look as polished as traditional virtual reality, they have the benefit of being able to accurately capture and communicate the physical world – and the way it changes – in real time.
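The case study doesn't detail how each depth image became a point cloud inside Unreal Engine. A common approach is pinhole back-projection of every depth pixel, followed by a rigid transform that moves each camera's points into one shared world frame. The Python sketch below illustrates that general idea only and is not the prototype's implementation. Several things here are assumptions:

- The names `Intrinsics`, `Extrinsics`, `depth_to_points` and `to_world` are hypothetical.
- Depth is taken to be in metres, with 0 marking a missing reading.
- Each camera's calibrated rotation and translation are taken as given, since the source doesn't say how they were estimated.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Hypothetical pinhole parameters for one depth camera, in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


@dataclass
class Extrinsics:
    """Hypothetical camera-to-world pose, assumed to come from a prior calibration step."""
    rotation: Sequence[Sequence[float]]  # 3x3 rotation matrix, row-major
    translation: Point  # camera position in world coordinates (metres)


def depth_to_points(depth: Sequence[Sequence[float]], k: Intrinsics) -> List[Point]:
    """Back-project a depth image (metres, 0 = no reading) into camera-space points."""
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue  # skip pixels where the sensor returned no depth
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z))
    return points


def to_world(points: List[Point], pose: Extrinsics) -> List[Point]:
    """Apply p_world = R @ p_camera + t so every camera shares one coordinate frame."""
    r, t = pose.rotation, pose.translation
    return [
        (
            r[0][0] * x + r[0][1] * y + r[0][2] * z + t[0],
            r[1][0] * x + r[1][1] * y + r[1][2] * z + t[1],
            r[2][0] * x + r[2][1] * y + r[2][2] * z + t[2],
        )
        for x, y, z in points
    ]
```

The sketch uses a common camera-frame convention: x right, y down, z forward. A game engine such as Unreal Engine has its own axis convention, so a real integration would need an extra remapping step.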

Accessibility + scale

Scalability

Our decision to use commercially available depth cameras and a freely available game engine for rendering was important in ensuring the usability and scalability of the data visualisation system. The accessibility of these technologies, and the flexibility to tailor the system to the specific needs of each research project, helped reinforce the prototype’s promise of significant cost savings over conventional options.

Meanwhile, the ability to incorporate any number of depth cameras also meant the prototype was able to capture multiple viewing angles to inform the eventual render. This was important as it would allow researchers to more accurately view and interact with a three-dimensional virtual hot cell environment, with easily scalable levels of resolution and fidelity.
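One way to picture combining any number of cameras at adjustable resolution is a two-step process. First, concatenate each camera's world-space cloud. Then thin the result with a voxel grid, keeping one averaged point per cube. The minimal Python sketch below works under that assumption. Voxel-grid downsampling is a standard point cloud technique swapped in here for illustration; the source does not say the prototype used it. The names `merge_clouds` and `voxel_downsample` are hypothetical.

```python
import math
from collections import defaultdict
from typing import DefaultDict, Iterable, List, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def merge_clouds(clouds: Iterable[List[Point]]) -> List[Point]:
    """Concatenate world-space clouds from any number of cameras."""
    merged: List[Point] = []
    for cloud in clouds:
        merged.extend(cloud)
    return merged


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points that fall in the same cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Running sums per voxel: [sum_x, sum_y, sum_z, count].
    sums: DefaultDict[VoxelKey, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0, 0.0])
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        acc = sums[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1.0
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]
```

A smaller `voxel_size` keeps more detail at a higher rendering cost, while a larger one trades fidelity for speed. Adding another camera only adds another list to `merge_clouds`.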

Interaction in real time

Real-Time

Unlike conventional viewing methods, the UNSW Nuclear Research prototype used a game engine, allowing users to interact with hot cell environments as they exist in real time. As a result, any real-world object captured by the depth cameras could be recognised and interacted with inside the reconstructed 3D point cloud.

Importantly, any changes to the environment were also captured and reflected in the virtual world, allowing users to interact with an accurate data visualisation at all times. This improved the usability of the overall system and the intuitiveness of interactions within the virtual world.
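Keeping the reconstruction current can be sketched as storing only the latest world-space frame from each camera. That way, moved or removed objects vanish instead of leaving stale points behind. The sketch adds a simple proximity query of the kind an interaction might use, such as checking whether a virtual tool touches captured geometry. The `LiveScene` class and its methods are hypothetical, not the prototype's code. The brute-force search is chosen for clarity; a real-time system would more likely use a spatial index.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


class LiveScene:
    """Hypothetical store holding the most recent world-space cloud from each camera."""

    def __init__(self) -> None:
        self._latest: Dict[str, List[Point]] = {}
        self.version = 0  # incremented on every frame so a renderer can detect changes

    def update(self, camera_id: str, world_points: List[Point]) -> None:
        # Replace (rather than append to) this camera's previous frame, so objects
        # that moved or were removed do not leave stale points behind.
        self._latest[camera_id] = list(world_points)
        self.version += 1

    def snapshot(self) -> List[Point]:
        # Concatenate the latest frame from every camera into one cloud.
        merged: List[Point] = []
        for points in self._latest.values():
            merged.extend(points)
        return merged

    def points_near(self, center: Point, radius: float) -> List[Point]:
        # Brute-force proximity query: every captured point within `radius` of `center`.
        cx, cy, cz = center
        r2 = radius * radius
        return [
            p for p in self.snapshot()
            if (p[0] - cx) ** 2 + (p[1] - cy) ** 2 + (p[2] - cz) ** 2 <= r2
        ]
```

If a camera reports an empty frame after an object is lifted away, that camera's old points disappear from `snapshot()` on the next update.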

Different ways to engage

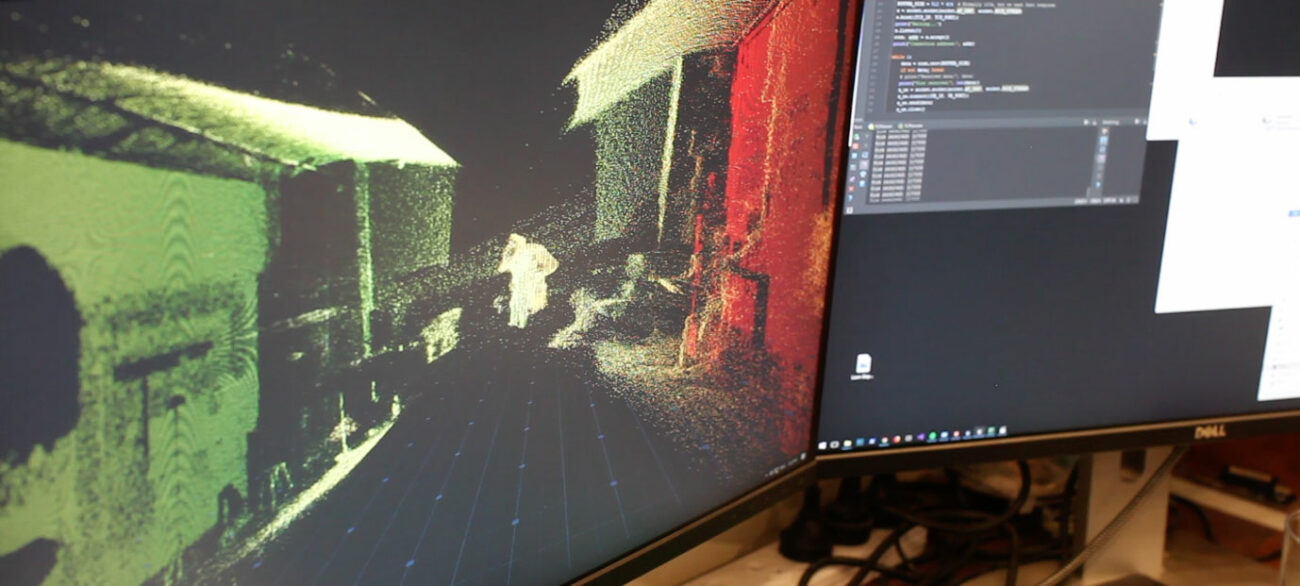

Virtual Reality

Developing the imaging system in Unreal Engine also allowed us to render reconstructed 3D scenes on both a computer monitor (in first or third person) and on virtual reality platforms. This gave users access to more flexible viewpoints that could be altered depending on their use case, while facilitating intuitive, real-time interactions within the contained environment.

Intuitive interactions

Usability + Safety

The ability to render scenes on a virtual reality headset gave our prototype a distinct edge over conventional visualisation methods. This choice would allow users to navigate intuitively and without constraint through a reliable, continuously updated 3D reconstruction.

In this way, the system represented a significant step forward for interaction within nuclear containment. It demonstrated how the intuitiveness of direct, real-time interaction with 3D scene reconstruction has the potential to improve the usability, safety and reliability of hot cell facilities.

What comes next

Next Steps

To date, our research team has successfully designed and developed the prototype of this real-time imaging system, proving its ability to capture depth images and convert them into a reconstructed 3D scene able to be used and updated in real time. First demonstrated at the HOTLAB 2017 Conference in Japan, this prototype has received significant interest from nuclear engineers and hot cell manufacturers alike.

The quality and reliability of the prototype system have also shown potential in other industries. With simple additions such as informational overlays on top of reconstructed scenes, this kind of real-time technology has clear potential in training and demonstration, where even pre-rendered virtual reality environments are proving effective educational tools.

Stay in the loop

Subscribe to our newsletter to receive updates and insights about UNSW Nuclear Research and other S1T2 projects.